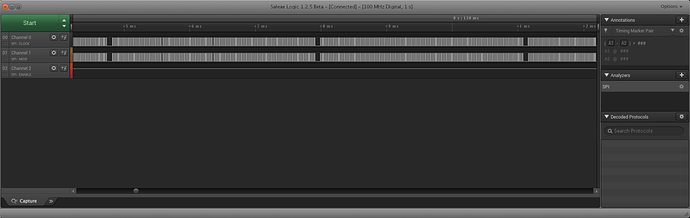

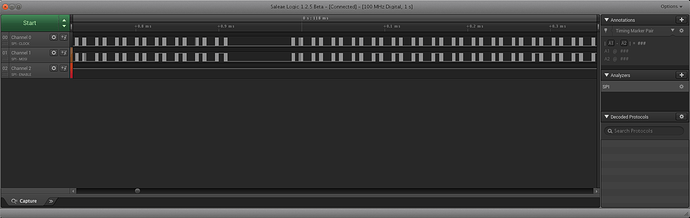

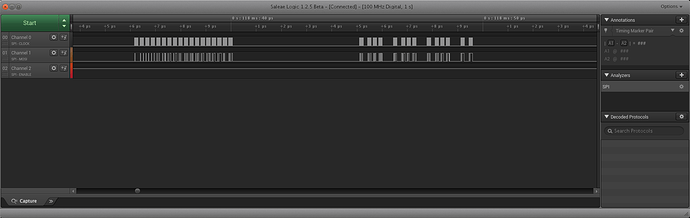

Some issues have been identified with the block mode of the spi-qup driver. Block mode is used for any transaction over a certain size (31 bytes, I believe). DMA is not used unless a very specific set of prerequisites is satisfied (such as alignment to the cache line size).
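To make the mode selection described above concrete, here is a minimal sketch in C. It is not code from the spi-qup driver: the names `choose_mode` and `dma_prereqs_met` are hypothetical, the 31-byte threshold is only the figure recalled above, and the 64-byte cache line and the exact DMA prerequisites (address and length both aligned) are assumptions made for illustration.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical names and values for illustration; not taken from spi-qup. */
enum xfer_mode { XFER_FIFO, XFER_BLOCK, XFER_DMA };

#define FIFO_MAX_BYTES   31u  /* threshold recalled in the post, unverified */
#define CACHE_LINE_BYTES 64u  /* assumed cache line size */

/* Assumed prerequisite: buffer address and length are cache-line aligned. */
static bool dma_prereqs_met(const void *buf, size_t len)
{
    return ((uintptr_t)buf % CACHE_LINE_BYTES == 0) &&
           (len % CACHE_LINE_BYTES == 0);
}

/* Pick a transfer mode for a buffer of len bytes. */
static enum xfer_mode choose_mode(const void *buf, size_t len)
{
    if (len <= FIFO_MAX_BYTES)
        return XFER_FIFO;   /* small transfers stay out of block mode */
    if (dma_prereqs_met(buf, len))
        return XFER_DMA;    /* only when every prerequisite holds */
    return XFER_BLOCK;      /* everything else lands in block mode */
}
```

Under these assumptions, most larger transfers fall through to block mode, since DMA is only chosen when every prerequisite holds. That would explain why block mode problems show up at many different transfer sizes.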

I worked up a set of patches that should fix this issue. Without them, I was seeing multiple failures at various block sizes. Since applying them, I have not seen any errors in transactions.

git://git.kernel.org/pub/scm/linux/kernel/git/agross/linux.git

branch: spi-wip

8ec8b82 spi: qup: Fix block mode to work correctly

807582a spi: qup: Use correct check for DMA prep return

767532d spi: qup: Wait for QUP to complete

2c438ae spi: qup: Fix transaction done signaling

These four are the ones you need. Please let me know if they fix your issue. I still need to redo some of the commit messages, but based on testing from srini, and hopefully from you, I can send them to the lists soon.

Regards,

Andy